In the rapidly evolving landscape of artificial intelligence, the question on every builder's mind is no longer "Can AI do this?" but "How do we build a product that is truly differentiated?" With powerful Large Language Models (LLMs) from OpenAI, Anthropic, and Google becoming increasingly commoditized, the competitive advantage of simply having AI capabilities is evaporating. Connecting a model like GPT-4 to a user's data via an API is now table stakes.

If your product is fundamentally a "Prompted Agent"—a system that waits for a user's command, researches a connected dataset, and returns an answer—you're not building a moat; you're building on rented land. The real, defensible value in 2025 and beyond will be found in creating "Proactive Agents" that possess their own opinionated, intelligent systems.

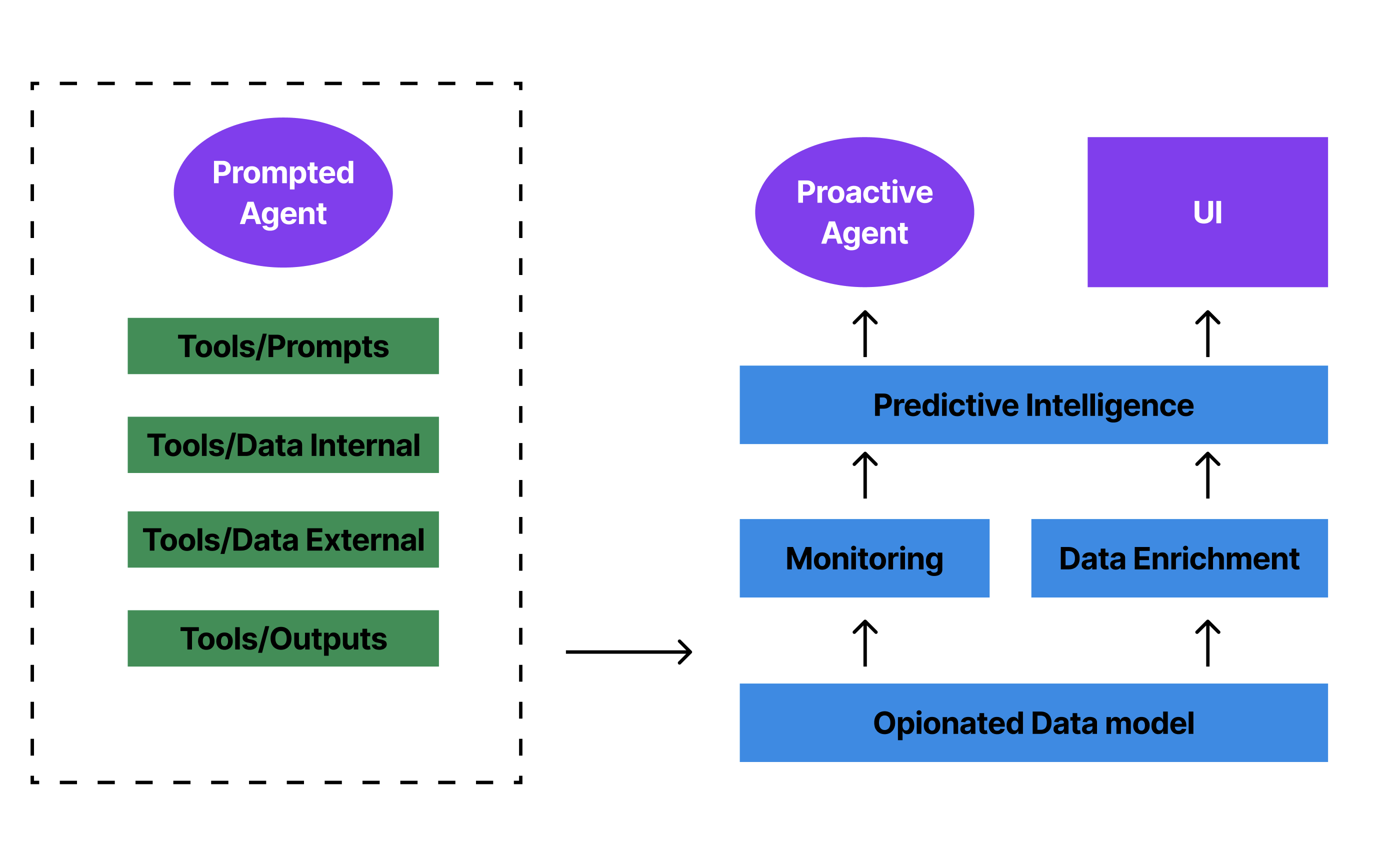

This diagram illustrates the architectural shift required:

On the left, we have the common "Prompted Agent." On the right, a "Proactive Agent" architecture designed for differentiation. Let's break down why this shift is critical.

The Commodity Stack: The "Prompted Agent"

The Prompted Agent is a reactive system. A user provides a prompt, and the agent uses a set of tools to access internal data (like files in a Google Drive) or external data (like public websites) to generate a response. This is the core of how custom GPTs and many first-generation "AI features" work.

While useful, this approach has fundamental limitations:

- It's Inefficient: The system treats every query like a new research project. If you ask, "What is the status of my active sales contracts?" it must scan all relevant documents every single time. It doesn't learn from past queries or build a persistent understanding of your business.

- It Lacks Deep Context: The agent's knowledge is stateless and shallow. It might remember facts from a conversation, but it doesn't understand the intricate relationships between your data—how one clause in a legal document creates a dependency in another, or how a metric in your CRM data relates to your financial projections.

- It's Not Built for Long-Term Goals: The entire system is designed to "answer the current question." It's not designed to help a user achieve a larger, long-term objective, like "keep my company's legal costs low" or "minimize deal risk." The user bears the entire cognitive load of what to ask and when.

This model ultimately competes with ChatGPT itself, a battle that is incredibly difficult to win.

The Differentiated Stack: The "Proactive Agent"

To build a defensible product, you must move beyond prompts and build an intelligent system around the AI. This is the "Proactive Agent," an application with its own point of view on how to solve a user's problem.

This architecture is built on a stack of compounding value:

1. The Opinionated Data Model

This is the foundation. Instead of just pointing an LLM at a folder of raw files, you build a structured, canonical representation of the domain. This isn't just basic Retrieval-Augmented Generation (RAG); it's about systematically extracting metadata, relationships, and concepts into a proprietary data model.

For an "AI Lawyer" application, this model wouldn't just be a collection of .docx files. It would be a graph of all your legal agreements, with nodes representing companies, people, specific clauses (like IP rights or liability caps), effective dates, and the dependencies between them. This data model becomes your core intellectual property.

2. Monitoring & Data Enrichment

A Proactive Agent's data model is not static; it's alive.

- Monitoring: The system should automatically detect and ingest new information. When a new contract is signed and saved to a specific folder, it's automatically processed and integrated into the data model without user intervention.

- Data Enrichment: The system actively enriches its data model with external context. For an AI that helps with M&A due diligence, it could ingest a target company's data room and then spawn agents to continuously scan the web for news, press releases, or market changes related to that company, feeding the insights back into the central model.

3. Predictive Intelligence

Once you have a rich, living data model, you can move from reactive answers to proactive, predictive insights. Because the system understands the "ideal state" defined by its model, it can flag deviations and opportunities automatically.

Imagine reviewing 50 documents in a data room. A Prompted Agent can only answer what you ask it. A Proactive Agent can automatically flag inconsistencies without being asked: "Warning: The liability cap in the Master Services Agreement ($1M) does not match the cap cited in the Statement of Work #3 ($500k)." This is intelligence that the user doesn't even know to ask for.

4. A Purpose-Built User Interface & Agent

The output of this intelligent system cannot be confined to a chat box.

- Workflow UI: Differentiation is achieved through a dedicated user interface that visualizes the data model and its insights. Instead of asking about employment clauses, a user visits a dashboard that shows every employee, their contract status, and automatically flags any non-standard terms. This becomes an indispensable workflow tool.

- Proactive Agent: The agent can now initiate contact. It can send an email or a Slack notification: "The new sales agreement with Acme Corp is using a non-standard IP clause. We recommend revising it before sending for signature." The interaction model is flipped from pull to push, delivering value before the user even forms a question.

From Answering Questions to Owning the Job

Building a differentiated AI product in 2025 is not about having a slightly better model or a cleverer prompt. It's about taking on the responsibility of a "job to be done."

The Prompted Agent is a tool that helps a user do their job. The Proactive Agent takes ownership of the job itself. It doesn't just help a lawyer review contracts; it takes on the role of managing the company's legal risk. It doesn't just help an analyst research a company; it becomes the single source of truth for that company.

This requires building a truly opinionated product with its own data model, its own intelligence, and its own workflows. It's more work, but it's the only way to build something that lasts.